AlphaGo, Two Triangles, and Your Political Intuitions Walk into a Bar with Jordan Ellenberg

There’s never going to be a final answer to ‘who’s watching who’, like who’s in charge and who’s subservient. I think in the end, there’s always going to be an interplay between the human and the device…You have to accept that there’s going to be iterations of responsibility and supervision between the human and the machine.

Intro

In this episode of The Decision Corner, Brooke speaks with Jordan Ellenberg, best-selling author and Professor of Mathematics at the University of Wisconsin-Madison. Through his research and his books, which include How Not to Be Wrong and, more recently, Shape: The Hidden Geometry of Information, Biology, Strategy, Democracy, and Everything Else, Jordan illustrates how abstract mathematical and geometric equations can be brought to life and used to address some of the major challenges facing the world today. In today’s episode, Jordan and Brooke talk about:

- The different types of ‘games’ or problems that people and society in general face, and why they require different types of solutions.

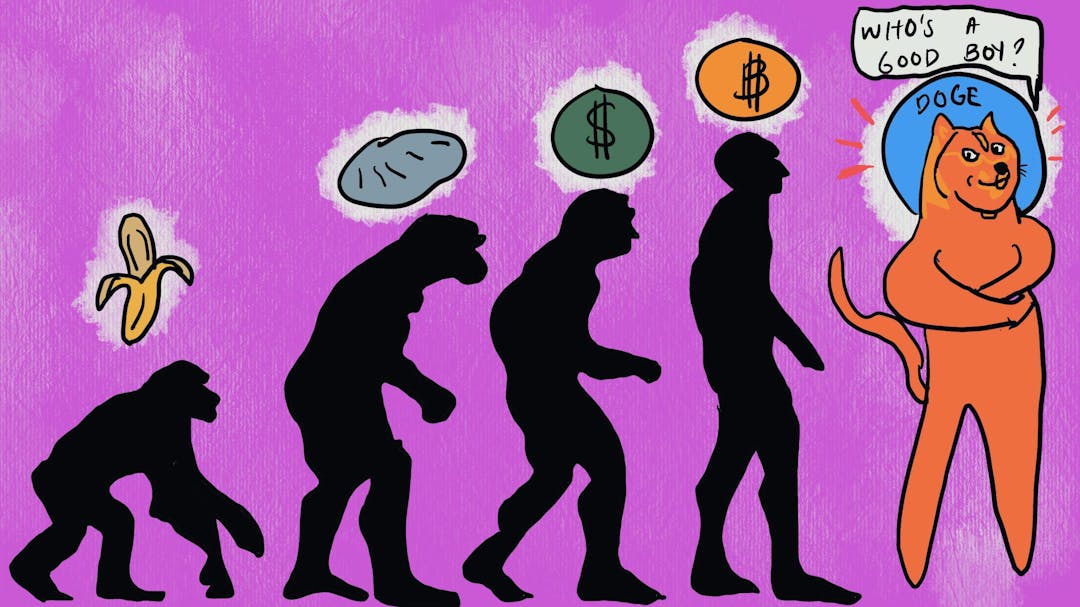

- The interplay between human decision-making and AI or algorithmic approaches, and understanding which methods should take precedence in which situations.

- Gerrymandering, electoral system design, and finding better ways to facilitate democracy.

- Understanding how AI can be an effective safeguard when we humans develop the rules of the game.