Why do people support their past ideas, even when presented with evidence that they're wrong?

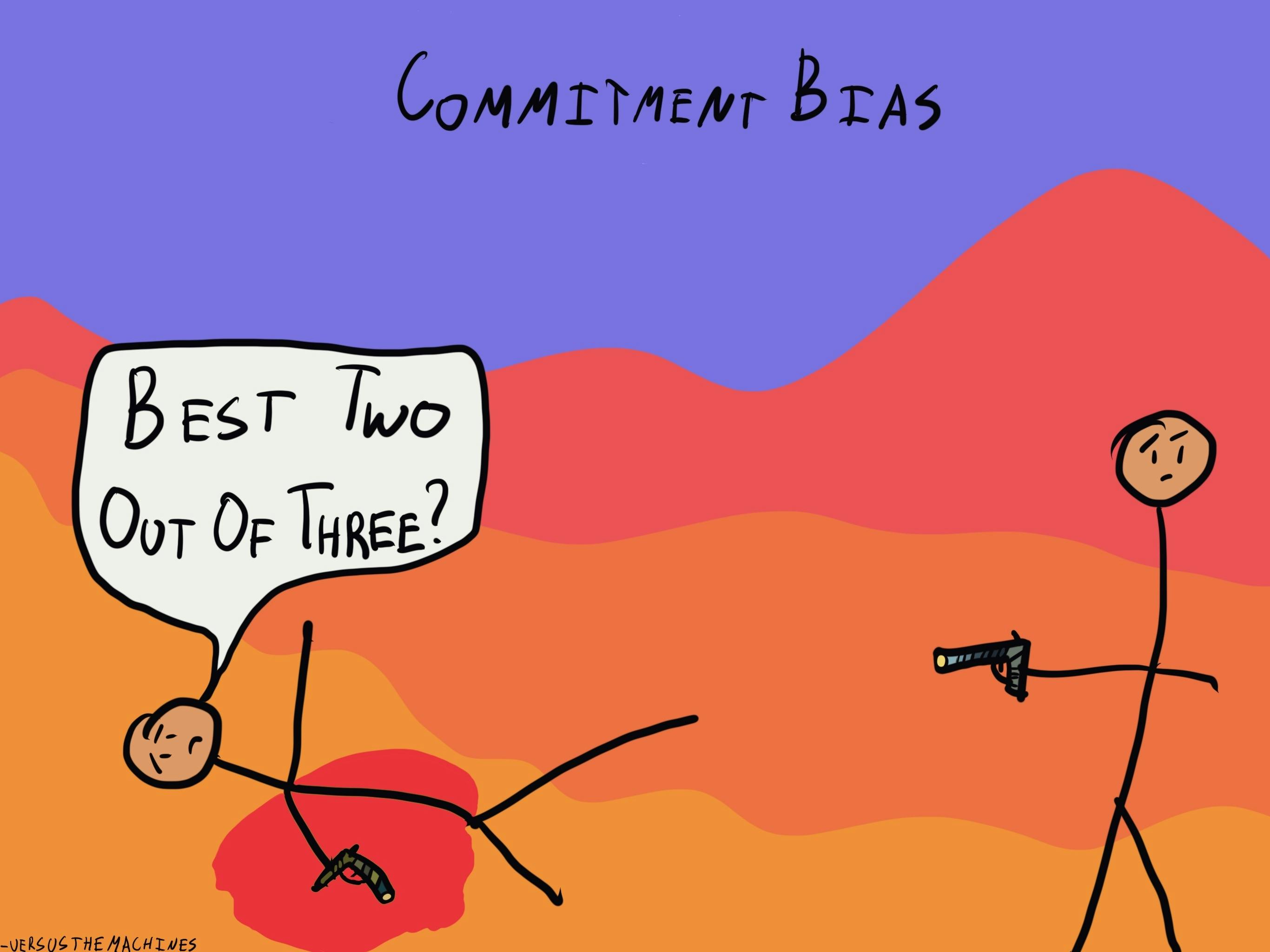

Commitment Bias, explained.

What is the Commitment Bias?

Commitment bias, also known as the escalation of commitment, describes our tendency to remain committed to our past behaviors, particularly those exhibited publicly, even if they do not have desirable outcomes.

Where this bias occurs

Imagine you’re wrapping up your first year of university, majoring in anatomy and cell biology. Your goal has always been to attend medical school and become a doctor, so it came as no surprise to anyone when this was the path you chose.

During your first semester, you enrolled in an elective course about the history of modern Europe. While you didn’t necessarily dislike your core anatomy classes, you found yourself deeply engaged in the history elective that you took on a whim. You enjoyed it so much, in fact, that you decided to take a couple of other history courses in your second semester and dedicated your free time to researching the concepts discussed in class. Throughout the year, a voice in the back of your mind has been pushing you to change your major and to get a Bachelor of Arts in history. However, this decision goes against your long-term goals and everything you’ve ever said about yourself. There’s nothing wrong with changing your mind, yet you feel pressured to keep things consistent. Your hesitation to change your major, even though it’s what you truly want to do, is the result of commitment bias.

Individual effects

The feeling that our future behaviors must align with what we have said and done in the past can seriously compromise our ability to make good decisions. This is especially true when our past choices have led to unfavorable outcomes. The pressure is also a problem when our past behaviors no longer reflect our current values, because staying consistent then means acting against what we now care about.

Refusing to change one’s stance can not only lead to undesirable results but also act as a barrier to personal growth. The ability to acknowledge flaws in our past behavior in order to better ourselves is highly adaptive: it gives us greater self-insight and helps us make decisions more critically and logically.

Systemic effects

Commitment bias can become an even greater problem when someone in a position of power exhibits it. It has been suggested that this happens in organizations where the decision-maker feels insecure about their status in the social hierarchy. Researchers also contend that the behavior is common when governmental policies are being put forth and the person tasked with making the decision is “anxious about [their] standing among constituents.”1 Because these decisions are often important ones, the poor decision-making that commitment bias produces is a real cause for concern.

How it affects product

Commitment bias is common in new product development. Research from Jeffrey B. Schmidt and Roger J. Calantone suggests that people who take on leadership roles at the start of the product pipeline are less likely to give up on a product, even when its costs exceed its value. They are also less likely than those who take over leadership later in development to recognize the project’s failures.11 To avoid this, it is important to keep seeking input as the product develops. Bringing people in from “the outside” at later stages adds a fresh perspective that can save the company time, money, and resources.

Commitment bias and AI

Artificial intelligence may not be susceptible to psychological phenomena the way humans are, but errors similar to commitment bias can still occur. In particular, “overfitting” looks like the machine equivalent of commitment bias. Overfitting occurs when a machine learning model fits its training data so closely, including the data’s random noise and quirks, that it cannot make accurate predictions on new data. Its commitment to past data distorts its decisions, leading to inaccurate predictions and poor performance. Similarly, commitment bias causes us to lean too heavily on our past behavior and values when making future choices.
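To make the analogy concrete, here is a toy sketch in Python using only the standard library. It assumes a hypothetical underlying relationship, y = 2x + 1, and adds made-up alternating noise to ten training points. A “memorizer” (a 1-nearest-neighbour lookup that returns the label of the closest training point) stands in for an overfit model, and an ordinary least-squares straight line stands in for a simpler one. The data, names, and both models are illustrative choices, not anything taken from the research discussed here.

```python
from statistics import fmean


def true_function(x: float) -> float:
    # Hypothetical underlying relationship the models are supposed to learn.
    return 2 * x + 1


# Training data: the true relationship plus made-up alternating noise (+1.5, -1.5, ...).
train_x = [float(i) for i in range(10)]
train_y = [true_function(x) + (1.5 if i % 2 == 0 else -1.5) for i, x in enumerate(train_x)]

# New, unseen inputs, scored against the noise-free relationship.
test_x = [i + 0.4 for i in range(9)]
test_y = [true_function(x) for x in test_x]


def memorizer(x: float) -> float:
    # Overfit model: return the label of the nearest training point.
    # It reproduces every training example exactly, noise included.
    nearest = min(range(len(train_x)), key=lambda i: abs(train_x[i] - x))
    return train_y[nearest]


def fit_line(xs, ys):
    # Simpler model: ordinary least-squares straight line.
    mx, my = fmean(xs), fmean(ys)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    intercept = my - slope * mx
    return lambda x: slope * x + intercept


def mse(model, xs, ys) -> float:
    # Mean squared error of a model's predictions.
    return fmean((model(x) - y) ** 2 for x, y in zip(xs, ys))


line = fit_line(train_x, train_y)

# (training error, error on new data) for each model
results = {
    name: (mse(model, train_x, train_y), mse(model, test_x, test_y))
    for name, model in [("memorizer", memorizer), ("straight line", line)]
}

for name, (train_err, test_err) in results.items():
    print(f"{name:14s} train MSE = {train_err:.2f}   test MSE = {test_err:.2f}")
```

The memorizer scores a perfect zero error on its training data, because it reproduces every training label, noise included, yet it does worse than the straight line on inputs it has never seen. Like a person caught in commitment bias, it stays loyal to its past data even when that data leads it astray.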

Why it happens

We are susceptible to commitment bias because we are constantly trying to convince ourselves and others that we are rational decision-makers. We do so by keeping our actions consistent and by defending our decisions to the people around us, in the belief that this will make us more credible.

Keep it consistent

Early research suggested that commitment bias is partly the result of self-justification. We use it to justify our behavior not only to ourselves but also to others when they confront us.

The reasonable thing to do when our decisions result in unfavorable outcomes would be to face the consequences and learn how to make better choices going forward. However, this is not always what happens. When our behavior has negative effects, we often change our attitudes toward the outcome instead.2 For example, if a study participant is asked to perform a tedious task without sufficient compensation, they will try to come up with another reason for having taken part in the experiment. They may convince themselves that they enjoyed the experience, even though the task was deliberately designed to be as dull as possible. People do this to reduce cognitive dissonance, the discomfort of holding inconsistent beliefs and behaviors, a concept introduced by Leon Festinger.3

Barry M. Staw, who was the first to study and describe commitment bias, posits that this attitude shift results from a need for consistency, which appears to motivate people in general.4 A lack of consistency produces the uneasy feeling associated with cognitive dissonance. By “changing” how we feel about the outcomes of our behavior, we eliminate that inconsistency and, by extension, our discomfort.

Saving face

Not only do we try to justify our behavior to ourselves, but we also try to make others see it as rational. To save face, we may defend our behavior by trying to convince others that our choice was not so bad after all. We may suggest that, although the immediate outcome was unfavorable, the decision will pay off in the long term.5 This resembles confirmation bias, a cognitive bias that describes how we selectively seek out information that supports our stance while ignoring information that discredits it. In the case of commitment bias, we cherry-pick information that makes our decision look like a good one while minimizing, or even completely disregarding, evidence that we made the wrong choice. The difference is that commitment bias concerns our commitments and actions rather than our beliefs.

While our shared desire for consistency pushes us to justify our behavior to ourselves, our need to be considered rational and competent by others motivates us to defend our behavior publicly. This causes us to remain committed to our initial decision because we feel that to do otherwise would call our ability to make sound decisions into question.

Why it is important

Being aware of commitment bias is an advantage in more ways than one. To begin with, recognizing the bias is what allows us to start avoiding it, and because it can lead to poor decisions, avoiding it is worthwhile. Dismantling the bias is also a starting point for personal growth, as doing so allows us to admit when we have made a mistake and learn from our past behavior.

Robert Cialdini’s Six Principles of Persuasion point to a second way in which awareness of commitment bias can benefit us.6 Cialdini identified principles that, when used ethically, can make you more persuasive. One of them is consistency, the same drive that underlies commitment bias. Cialdini explained that getting people to make a small commitment early on increases the chances that they will agree to a more significant commitment later. This knowledge can be useful in many areas, from making a sale to persuading someone to keep up with their annual visit to the doctor.

How to avoid it

Avoiding commitment bias isn’t always easy. For one thing, it means going against our natural drive for consistency. For another, changing course makes us worry that others will think poorly of us for having made a bad decision.

The first step to avoiding commitment bias is recognizing that consistency isn’t the be-all and end-all. If you find that some of your past behaviors no longer align with your goals or values, there’s no reason to remain committed to them. We’re allowed to grow and change; in fact, doing so is encouraged. Making decisions based solely on a desire for consistency or a fear of shaking things up is irrational, and consciously recognizing that can help us avoid this kind of behavior in the future.

Furthermore, while we worry that others will think less of us if our decisions lead to negative outcomes, people tend to have more respect for those who can admit that they made a mistake. And since there will always be someone who disagrees with your choices, there is little point in trying to please everyone. Think back to the example of the university student who is putting off changing their major because they’re worried people will judge them. Perhaps they’re right; some friends or family members may be surprised or even disappointed by the change. However, the decision to change majors affects the student far more than anyone else, so why should they let others’ judgment hold them back? The key to avoiding commitment bias is to focus on the good that will come from changing your behavior instead of worrying about what others will think of you.

How it all started

The first description of commitment bias came from Staw’s 1976 paper, “Knee-deep in the big muddy: a study of escalating commitment to a chosen course of action”.7 He pointed out that when our decisions have negative consequences, the obvious response would seem to be trying something else, yet these undesirable outcomes often lead us to dig in and commit even more firmly to our initial choice. Staw drew on the literature on forced compliance to support this hypothesis, noting that participants in these kinds of experiments often try to justify their behavior.

Staw tested his theory by having participants read a business case study and decide how to allocate funds within a hypothetical company. The experimenters manipulated the consequences of those decisions: participants were placed in either a “positive outcomes” condition or a “negative outcomes” condition. In the former, their funding decision turned out well; in the latter, the outcome was unfavorable and they were told that they had made a significant error. The findings supported Staw’s theory: participants in the “negative outcomes” condition escalated their commitment to their original choice. There was also evidence that participants were trying to justify their behavior both to themselves and to others.8

Example 1 – Sunk Cost Fallacy

The sunk cost fallacy refers to our urge to follow through with something once we’ve invested time or money into it. It is an example of commitment bias because it persists even when the outcome isn’t the one we hoped for. We feel that if we don’t stay committed, our investment will have been for nothing, which makes us feel wasteful and leads us to question our ability to make rational decisions. So even though it’s usually not the best course of action, suffering through the negative consequences can feel better than walking away.

The concept originates in economics, where a sunk cost is money that has already been spent and cannot be recovered. Because a sunk cost is gone no matter what you decide next, economic reasoning says it should not factor into the decision to keep investing in something. This is sound advice, but we don’t always follow it.

For example, have your eyes ever been bigger than your stomach and caused you to order far too much food at a restaurant? And did you force yourself to eat it all, simply because you were going to have to pay for it either way? This is an example of sunk cost fallacy. You felt the need to overeat in order to get your money’s worth, even though you probably enjoyed your meal less because of it.
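The economic rule above, applied to the restaurant example, can be sketched as a tiny decision procedure in Python. This is a minimal illustration under stated assumptions: the `Option` class, the function names, and the point values are all hypothetical, and benefits, costs, and the already-paid bill are placed on a single made-up “satisfaction point” scale. Each option is scored by what it still offers, minus what it still costs, minus the sunk cost.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Option:
    name: str
    future_benefit: float  # value you can still gain by choosing this option (hypothetical units)
    future_cost: float     # cost you will still pay by choosing this option (hypothetical units)


def total_outcome(option: Option, sunk_cost: float) -> float:
    # The sunk cost is already spent and cannot be recovered,
    # so it is subtracted from every option alike.
    return option.future_benefit - option.future_cost - sunk_cost


def best_option(options: Sequence[Option], sunk_cost: float) -> Option:
    # Pick the option with the best overall outcome.
    return max(options, key=lambda o: total_outcome(o, sunk_cost))


# Hypothetical restaurant scenario, scored in made-up "satisfaction points".
options = [
    Option("finish the plate", future_benefit=2.0, future_cost=5.0),  # a few more bites, plenty of discomfort
    Option("stop eating", future_benefit=0.0, future_cost=0.0),
]

# However large the already-paid bill is, the best choice stays the same.
for sunk in (0.0, 30.0, 1000.0):
    print(f"sunk cost {sunk:>6}: best choice is {best_option(options, sunk).name!r}")
```

Because `sunk_cost` shifts every option’s total by the same amount, dropping it entirely would not change the answer. The fallacy amounts to treating that shared term as if it counted only against walking away.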

Example 2 – DARE

Many people could tell you about their grade-school experiences with the Drug Abuse Resistance Education (DARE) Program. The program was launched in the 1980s by a police chief and a school board, with the goal of deterring teens from using drugs, joining gangs, and engaging in violence. It was usually delivered by police officers, who preached a zero-tolerance approach to drug use and tried to teach children good decision-making skills.

A meta-analysis of several studies on DARE’s effectiveness found that the program has no effect on the likelihood that participants will use drugs. Moreover, despite its popularity, DARE was found to be even less effective than similar programs.9 Even more troubling are the findings of Werch and Owen, which showed that programs similar to DARE, and in one case DARE itself, can be iatrogenic; that is, they can actually increase the likelihood of participants using drugs.10

Despite this evidence, DARE continues to receive substantial government funding. This is an example of commitment bias: the government has remained committed to this approach to preventing drug use even though the outcomes are clearly unfavorable.

Summary

What it is

Commitment bias describes our unwillingness to make decisions that contradict things we have said or done in the past, especially when those earlier words or actions were public.

Why it happens

When our past decisions lead to unfavorable outcomes, we feel the need to justify them to ourselves and others. We then build arguments in support of that behavior, which can change our attitudes toward it.

Example 1 – Sunk cost fallacy

The sunk cost fallacy is a form of commitment bias. It describes the need we feel to follow through with something once we’ve invested time or money into it.

Example 2 – DARE

Despite the evidence against its effectiveness, the government continues funding the Drug Abuse Resistance Education (DARE) Program. This is an example of commitment bias, as it illustrates our continued commitment to a cause despite its unfavorable outcomes.

How to avoid it

To avoid commitment bias, remember that it’s always better to base a decision on logic and reason than on its consistency with your past behavior. And instead of worrying about what other people will think if you admit you made a mistake, focus on the good that comes from changing your behavior.