Why do we tend to think that things that happened recently are more likely to happen again?

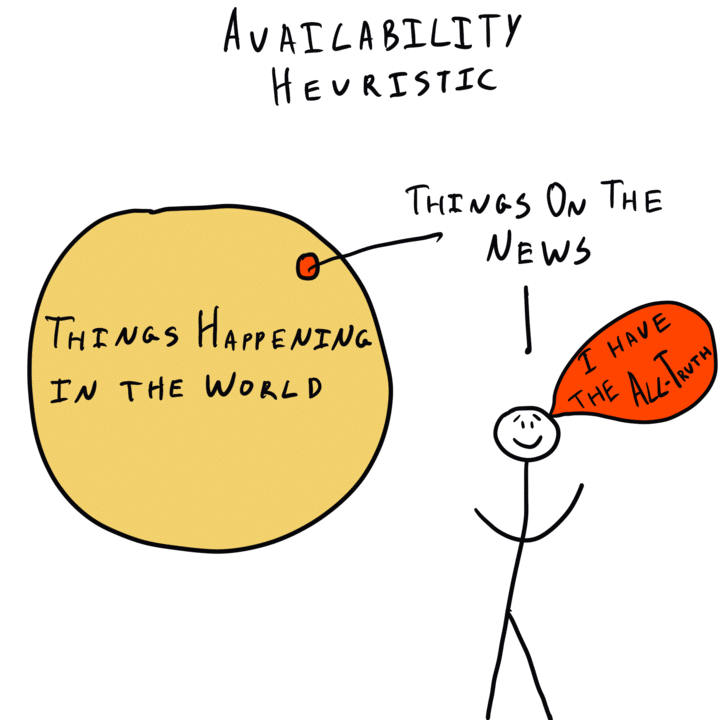

Availability Heuristic, explained.

What is the availability heuristic?

The availability heuristic describes our tendency to use information that comes to mind quickly and easily when making decisions about the future.

Where this bias occurs

Imagine you are a manager considering either John or Jane, two employees at your company, for a promotion. Both have a steady employment record, though Jane has been the highest performer in her department during her tenure. However, in Jane’s first year, she accidentally deleted a company project when her computer crashed. With this incident in mind, you decide to promote John instead.

In this hypothetical scenario, the vivid memory of Jane losing that file likely weighed more heavily on your decision than it should have. This unequal evaluation is due to the availability heuristic, which suggests that singular memorable moments have an outsized influence on decisions when compared to less memorable ones.

Individual effects

The availability heuristic can lead to bad decision-making because easily recalled memories are often a poor basis for judging how likely similar events are to happen again. Ultimately, this overestimation leaves us with low-quality information on which to base our decisions. Meanwhile, less memorable events that offer better evidence for our predictions go overlooked. For example, many people falsely assume that driving is safer than flying since it is easier to recall vivid images of deadly plane crashes than car crashes.

Systemic effects

Acknowledging the availability heuristic forces us to reexamine what we once held true about decision-making. Many prevailing theories in economics frame humans as rational choosers, proficient at evaluating information. In reality, each one of us analyzes information in a way that prioritizes memorability and proximity over accuracy. This startling misjudgment of our mental capacity means that academics and professionals alike will have to revisit their basic assumptions about how people think and act to enhance the quality of behavioral predictions.

How it affects product

Imagine scrolling through your Instagram feed and stumbling upon an ad for a pair of sneakers. The font is bold, the images are bright, and the catch-slogan just feels right. When you are at the mall going shoe shopping weeks later, you cannot resist buying that same pair you saw before. Even though other brands may be proven to provide better traction or arch support, they do not come as easily to your mind while you browse the shelves. The vividness of that ad not only caught your eye but stuck in your brain, determining your later purchase.

Although advertising has always played on the availability heuristic—whether that be big flashy billboards along the side of the highway or repetitive postings in newspapers and magazines—online advertising has taken this to a whole new level. By tracking how users interact with the ad, such as how long we spend looking at it or the number of times we click on it, advertising algorithms will adjust to continue feeding us similar content. So if you paused to stare at that pair of shoes for a little bit too long on Instagram, the next thing you know, your entire feed will be bombarded by almost identical ads. This feedback loop perpetuates the availability heuristic by leaving you convinced this is truly the best option for sneakers when really they are just the sneakers most readily available to your mind.

Availability heuristic and AI

Although we habitually overestimate the probability of distinct events, at least the predictions calculated by AI are free of any biases… right?

Unfortunately, this is far from the case. Supervised machine learning is susceptible to the availability heuristic, just like us. Although the algorithms themselves may be objective, the information these computations are based on is anything but. After all, it is humans who feed this software biased samples. This means that AI is trained to detect patterns that exist within the datasets we provide it with, not necessarily ones that exist in the real world. And since these datasets may already overrepresent memorable events, AI may inadvertently learn to overestimate their probability, too.

If anything, machine learning amplifies the availability heuristic even more than usual because we assume that its algorithms are resistant to prejudiced predictions. We are less likely to look out for biases in the results they generate and accept their answers as the absolute truth. Don’t let this deter you from using AI to calculate outcomes—just remember that anything designed by humans is prone to human error, too.
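
To make the point about biased samples concrete, here is a minimal Python sketch. It is not a real machine learning pipeline: a simple frequency count stands in for a trained model, and both datasets (`population` and `news_sample`) contain made-up numbers chosen only for illustration.

```python
from collections import Counter


def estimated_rate(samples, label):
    """Return the share of samples carrying `label` (a plain frequency estimate)."""
    counts = Counter(samples)
    return counts[label] / len(samples)


# Hypothetical "real world": 1 plane incident for every 999 car incidents.
population = ["plane"] * 1 + ["car"] * 999

# Hypothetical training set built from news coverage, where dramatic
# plane incidents are heavily overrepresented.
news_sample = ["plane"] * 40 + ["car"] * 60

true_rate = estimated_rate(population, "plane")      # rate in the full population
learned_rate = estimated_rate(news_sample, "plane")  # rate the "model" would learn

print(f"true rate: {true_rate}, learned rate: {learned_rate}")
```

The counting procedure is identical in both cases; only the data differ. In this toy setup, the estimate learned from the news-based sample is hundreds of times higher than the rate in the full population.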

Why it happens

Your brain uses shortcuts

A heuristic is a “rule of thumb,” or mental shortcut, that guides our decisions. As with any heuristic, the availability heuristic helps us make choices more easily and quickly by drawing information from our memory. However, the tradeoff for this snap judgment is losing our ability to accurately gauge the probability of certain events, as our memories may not be realistic models for forecasting future outcomes.[1] Unfortunately, we fall victim to this form of miscalculation every day.

For example, if you were about to board a plane, how would you estimate the probability of crashing? Many different factors could impact the safety of your flight, and trying to calculate them all in your head would be very difficult. Provided that you didn’t google the relevant statistics, your brain may resort to your memory to satisfy your curiosity.

Certain memories are recalled more easily than others

In this situation, your brain may use a common mental shortcut by drawing upon the information that comes most easily to mind. Perhaps you recently read a news article about a massive plane crash in a nearby country. The memorable headline, paired with the vivid image of a wrecked plane wreathed in flames, left an enduring impression, causing you to wildly overestimate the chance that you’ll be involved in a similar crash.

The availability heuristic exists because some memories and facts are spontaneously retrieved, whereas others take intentional effort and reflection to retrieve. Certain memories are automatically recalled for two main reasons: they appear to happen often or they leave a lasting imprint on our minds.

Some events seem to happen more often than they do

Amos Tversky and Daniel Kahneman, two pioneers in behavioral research, demonstrated that some events seem more frequent in our memory than they actually are in real life.[2] In a 1973 study, they asked participants to estimate whether more words begin with the letter K or have K as their third letter.

Around 70% of the participants assumed that more words begin with K, even though a typical body of text contains twice as many words in which K is the third letter rather than the first. The students guessed incorrectly because it is much easier to think of words that begin with K (think kitchen, kangaroo, and kale) than words that have K as the third letter (think ask, cake, and biking). The availability heuristic fools us into believing that since words beginning with K are simpler to recall, there are more of them out there.
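
Rather than relying on recall, the comparison can be checked by counting. The short Python sketch below shows one way to do that. The function name `count_letter_positions` and the sample sentence are invented for illustration, and a sentence this small says nothing about English as a whole; a real check would need a large body of text.

```python
import re


def count_letter_positions(text, letter="k"):
    """Count words starting with `letter` and words with `letter` as third letter."""
    words = re.findall(r"[a-z]+", text.lower())
    first = sum(1 for w in words if w[0] == letter)
    third = sum(1 for w in words if len(w) >= 3 and w[2] == letter)
    return first, third


# Made-up sentence used only to exercise the function.
sample = "Ask the baker to make a cake and keep the kale in the kitchen"
first, third = count_letter_positions(sample)
print(f"K first: {first}, K third: {third}")
```

Words like “ask,” “baker,” “make,” and “cake” are counted just as reliably as “keep,” “kale,” and “kitchen,” even though the latter group is the one that springs to mind.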

Events that leave a lasting impression seem more common

Other events leave a lingering imprint on our minds, increasing their chances of being recalled when we make decisions. Tversky and Kahneman exposed this tendency in a study conducted in 1983,[3] in which half of the participants were asked to guess the chance of a massive flood occurring somewhere in North America, while the other half were asked to guess the chance of a massive flood occurring due to an earthquake in California.

Statistically speaking, a flood caused by an earthquake in California is a special case of a flood somewhere in North America, so it can never be the more probable of the two. Nonetheless, participants asked about the earthquake-induced flood in California gave higher estimates than those asked about a flood anywhere in North America.

Using the availability heuristic, an explanation for this gross overestimation is that an earthquake in California is easier to imagine. There is a coherent story: a familiar event (the earthquake) causes the flood in a context that creates a vivid picture in one’s head (California). A large, ambiguous area like all of North America does not create a clear image, so the participants had no lasting mental imprint to draw on for making their prediction.
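
The statistical constraint behind this study is the conjunction rule from basic probability. As a brief sketch, let $F$ stand for “a massive flood occurs somewhere in North America” and $E$ for “an earthquake in California causes that flood.” These symbols are labels introduced here for illustration, not notation from the original study, and the expression assumes $P(F) > 0$:

```latex
P(F \cap E) = P(F)\,P(E \mid F) \le P(F), \qquad \text{since } 0 \le P(E \mid F) \le 1 .
```

Whatever the actual values are, adding the earthquake detail can only keep the probability the same or lower it, even though it makes the story easier to picture.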

Why it is important

The availability heuristic has serious consequences in most professional fields and many aspects of our daily lives. People make thousands of decisions per day and, unfortunately, lack the time and mental capacity to rationally calculate each one. Instead, we take mental shortcuts that draw on our memory. Biased sources, like media coverage that conjures emotional reactions and vivid images, shape how we estimate the likelihood of events. Awareness of such intrinsic biases can safeguard against fallacious reasoning that results in poor judgments.

For instance, reading flashy headlines may cause us to form unconscious biases about certain minority groups committing crimes when, in reality, they are more often the target of crimes. When a shooter killed six people in a Nashville school in March of 2023, many people fixated on the fact that the perpetrator was trans. Combined with other “evidence” from media outlets accusing trans people of trespassing in bathrooms, this coverage may lead viewers to form a misguided prejudice that those with certain gender identities are dangerous.[11]

In this case, the availability heuristic is perpetuating a stereotype that could not be further from the truth. It is statistically much more common for trans people to be murdered than for trans people to murder others. However, these hate crimes towards gender minorities remain unaddressed when news sources make very improbable events the most easily retrievable in our minds.

How to avoid it

The availability heuristic is a core cognitive function for saving mental effort, resulting from numerous shortcuts our brain makes to help process as much information as possible. Unlike with a sleight-of-hand trick, simply knowing how it works is not sufficient for overcoming its illusion completely.[4] This bias is too deeply rooted in our neural networks for us to completely rewire our brains to avoid it.

Although awareness of the availability heuristic alone cannot change our personal thought processes, it can help drive systemic change in the policies we support or implement. For instance, taking measures to identify and remove biased information in our legal system is crucial for ensuring fair treatment for everyone, especially minority groups that are disproportionately affected by the availability heuristic, such as trans individuals. Just as important, we must hold media sources and newspapers accountable for their unequal reporting of events because it has real consequences for how the general public views the world and each other.

System 1 and System 2 thinking

Although guaranteeing thoughtful and rigorous mental analysis sounds great in theory, it is challenging in practice. This is because our default setting is what Kahneman refers to as “System 1 thinking,” which is fast and automatic. The availability heuristic takes advantage of System 1 because, without thorough deliberation, we rely on quick approximations that are often skewed by our memories.

Overcoming the availability heuristic requires us to instead activate “System 2 thinking,” which Kahneman defines as deliberative, careful, and reflective decision-making.[5] This is often easier to do in collective decision-making because others can catch instances when an individual is captivated by superficially convincing (but ultimately false) information.

Red-teaming for debiasing the availability heuristic

A more deliberate strategy to counter the availability heuristic is called “red-teaming,” which involves nominating one member of a group to challenge the prevailing opinion, no matter what their personal beliefs are.[6] Intentionally seeking out mistakes can reduce the chance that heuristics are reflexively treated as facts, which may have gone unquestioned otherwise.

In order for red-teaming or other similar initiatives to be effective, we must identify how the availability heuristic impacts collective behavior. Understanding a bias may not eliminate it completely from our decision-making. However, awareness increases the chances that we will be able to identify it in group settings when we interact with colleagues and collaborators.

Shortcuts like the availability heuristic are especially tenacious until one develops an understanding of how they work. A dedicated devil’s advocate can even fall prey to the same biases that they call out in others unless they are specifically attentive to where those biases take effect.

Combining expert insights from behavioral science with well-supported resources can prevent bad decision-making and can help increase productivity across a variety of environments. For those of us without an expert consultant on hand, educating ourselves about behavioral science is a solid first step toward leveraging its power to influence important choices.

How it all started

Tversky and Kahneman’s work in 1973[7] established the foundations of what we know about the availability heuristic. Originally, they described this bias as “whenever [one] estimates frequency or probability by the ease with which instances or associations could be brought to mind.” In other words, one guesses the likelihood of how often things happen by using easily recalled memories as a reference.

The concluding remarks of their paper noted that analyzing the heuristics that a person uses when making decisions can predict whether their judgment will overestimate or underestimate probability. Everyday life is filled with uncertainty due to the seemingly infinite number of decisions our brains make and the amount of information they process, which explains why understanding common shortcuts is so important. By being aware of the availability heuristic, humans can begin to make fewer errors when faced with uncertain conditions.

Example 1 – Lottery winners

After watching a documentary series or seeing a plethora of advertisements about the luxurious lives of lottery winners, you might mistakenly think that your chances of winning are higher than they actually are. The vivid images of decked-out mansions and brand-new sports cars leave a strong impression on your mind, which makes them easy to recall. Later that day, you are feeling lucky, so you buy a Lotto 6/49 ticket with a $40 million jackpot prize.

Due to the sheer amount of media coverage dedicated to the lottery, you figure you have a decent chance of winning; after all, those winners were regular people just like you before buying that lucky ticket. However, you forget the statistics homework assignment from a few years earlier in which you calculated the odds of winning the 6/49 lottery as 1 in 13,983,816.[8] Unfortunately, your ticket does not end up winning, which might not have surprised you had you been able to more easily recall the actual odds you were up against.
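
The figure of 1 in 13,983,816 can be reproduced with a few lines of Python. This sketch assumes the simplest reading of a 6/49 game: one ticket, six numbers chosen from 49 without regard to order, and a jackpot that requires matching all six. The function name `jackpot_combinations` is invented for illustration, and bonus numbers or smaller prize tiers are ignored.

```python
from math import comb


def jackpot_combinations(pool_size=49, numbers_drawn=6):
    """Number of equally likely tickets when the order of numbers does not matter."""
    return comb(pool_size, numbers_drawn)


tickets = jackpot_combinations()
probability = 1 / tickets  # chance that a single ticket hits the jackpot

print(f"1 in {tickets:,}")  # prints "1 in 13,983,816"
```

Seeing the calculation spelled out is a small way of swapping the vivid image of a winner for the actual odds.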

Example 2 – Drug use and the media

A study conducted by Russell Eisenman in 1993[9] examined how media coverage of specific topics can impact people’s perceptions via the availability heuristic. In this study, college students were asked to predict whether drug use in the United States was increasing or decreasing. To no surprise, participants were more likely to guess that drug use was skyrocketing, despite reputable survey data from the National Household Survey on Drug Abuse that showed otherwise. Eisenman cited a 1984 study by Tyler and Cook,[10] which concluded that constant media coverage of certain topics like drug use can distort perceptions of how often those events occur in the real world.

The key idea is that news stories about sensationalized and relatively rare topics such as drug overdoses or plane crashes can evoke the availability heuristic. People wildly overestimate the chance that these events happen compared to other deadly events that are statistically more likely, such as heart disease or car accidents. Depending on the sources you watch and read, your decisions could be based on heavily biased information.

Summary

What it is

The availability heuristic describes the mental shortcut where we make decisions based on emotional cues, familiar facts, and vivid images that leave an easily recalled impression in our minds.

Why it happens

The brain tends to minimize the effort necessary to complete routine tasks. When making decisions, especially ones involving probability, certain memories and knowledge jump out at us to replace the demanding task of calculating statistics. Some memories leave a lasting impression because they connect to emotional triggers. Others seem familiar because they align with the way we process the world, such as recognizing words by their first letter. Either way, we tend to overestimate how often the events that come most easily to mind actually happen, even if they are quite rare.

Example #1 - Lottery winners

We might buy a lottery ticket because the lifestyle that follows winning comes to mind easily and vividly. On the other hand, the likely chances of losing do not come to mind while standing at the ticket counter.

Example #2 - Drug use and the media

The events behind sensational news stories seem much more likely to occur than unremarkable (yet dangerous) ones. The availability heuristic skews our fear toward events that leave a lasting mental impression because of their graphic content or unexpectedness, and away from comparably dangerous and more probable events that are not as distinctive.

How to avoid it

The best way to avoid the availability heuristic on a small scale is to combine expertise in behavioral science with dedicated attention and resources to locate the points where it takes hold of individual choices. On a larger scale, the solution remains similar. Dedicating a specialized team to focus on the role of heuristics in public policy, institutional behavior, or media output can achieve more logical outcomes wherever human behavior is concerned.

Related TDL articles

A Nudge A Day Keeps The Doctor Away

This article examines how nudging can be used to help drive desirable outcomes in the medical field, from increasing organ donors to reducing the use of misprescribed antibiotics. The author notes that the availability heuristic can get in the way of our efforts to stay healthy, such as when we remember that taking a specific screening test in the past hurt. This can be harmful if it causes us to avoid potentially helpful screening tests in the future.

How to Protect An Aging Mind From Financial Fraud

This article uses a behavioral science lens to explore why elderly individuals are more likely to be victims of financial fraud, and how this deception can be prevented. The author notes that elderly individuals may be more susceptible because they tend to think more in the present, which can increase their vulnerability in financial decision-making environments.