Bayesian Network

The Basic Idea

Uncertainty is a fact of life. However, the existence of uncertainty does not mean we can’t make any predictions about cause and effect relationships. Probability theory suggests that although we cannot be certain about a single outcome of a random event, we can predict the probability of a number of possible outcomes.1 Probability theory is about making informed inferences in the face of uncertainty.

A Bayesian network is a probabilistic graphical model. It is used to model the unknown based on probability theory. It represents variables as nodes and uses the links between them to show which variables depend on one another and which are independent. A Bayesian network can also be read backwards: starting from an observed event, it estimates how likely each possible cause is. In other words, a Bayesian network provides information about probabilities regarding causes and effects of events.

For example, if you were to observe that the grass is wet, you might ask, “What is the probability that it is wet because it is raining?” To figure out the probability, you would have to calculate how often the cause of wet grass is rain, which also means knowing how often the grass is wet for a different reason (such as the sprinkler being turned on). Since the sprinkler being turned on is also dependent on whether or not it rains, a Bayesian network would map out the various conditional variables and respective probabilities.2
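The wet-grass question can be worked through numerically. The sketch below is a minimal illustration, not a reference implementation: every probability in it is a hypothetical value chosen for the example, and the function names are made up here. It multiplies each node's local probability table to get the joint probability, then sums over the sprinkler to find the probability of rain given wet grass.

```python
from itertools import product

# Hypothetical probabilities, chosen only for illustration.
P_RAIN = 0.2
P_SPRINKLER_GIVEN_RAIN = {True: 0.01, False: 0.40}  # keyed by rain
P_WET_GIVEN = {  # keyed by (sprinkler, rain)
    (True, True): 0.99,
    (True, False): 0.90,
    (False, True): 0.80,
    (False, False): 0.00,
}

def joint(rain, sprinkler, wet):
    """P(rain, sprinkler, wet) as a product of each node's local table."""
    p_rain = P_RAIN if rain else 1 - P_RAIN
    p_spr = P_SPRINKLER_GIVEN_RAIN[rain]
    p_spr = p_spr if sprinkler else 1 - p_spr
    p_wet = P_WET_GIVEN[(sprinkler, rain)]
    p_wet = p_wet if wet else 1 - p_wet
    return p_rain * p_spr * p_wet

def prob_rain_given_wet():
    """P(rain | grass is wet), summing the joint over the sprinkler."""
    wet_and_rain = sum(joint(True, s, True) for s in (True, False))
    wet_total = sum(joint(r, s, True)
                    for r, s in product((True, False), repeat=2))
    return wet_and_rain / wet_total

print(round(prob_rain_given_wet(), 4))  # 0.3577
```

With these made-up numbers, wet grass raises the probability of rain from 20% to about 36%, because the sprinkler remains a plausible alternative explanation.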

Under Bayes’ theorem, no theory is perfect. Rather, it is a work in progress, always subject to further refinement and testing.

– American statistician Nate Silver3

Key Terms

Probability Theory: a branch of mathematics that examines random phenomena. In the simplest case, where every outcome is equally likely, it determines how likely an event is to occur by dividing the number of outcomes that count as the event by the total number of possible outcomes. To determine the likelihood of a coin landing on heads, for example, probability theory would divide the number of “heads” outcomes (1) by the number of possible outcomes (2: heads or tails), yielding a 1 in 2 chance.4

Nodes: in a Bayesian network, each node is a distinct random variable.2

Directed Acyclic Graphs: graphs that display assumptions about the relationships between variables (nodes). In directed acyclic graphs, the relationships are always unidirectional. They move from cause to effect only. Importantly, acyclic graphs do not have feedback loops: descendant nodes do not impact parent nodes. Essentially, parent nodes are variables higher up on the graph that impact what is below (descendant nodes).5

Feedback Loop: loops occur in graphical networks when descendant nodes also impact parent nodes.

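Markov Condition: an assumption made in Bayesian networks that each node, once the values of its parent nodes are known, is independent of all nodes that are not its descendants. Together with the acyclic, unidirectional links, this assumption lets the network describe each variable using only its parents.6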
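The terms above can be made concrete with a small data structure. The following sketch assumes a hypothetical three-node network (rain, sprinkler, wet grass) stored as a mapping from each node to its parents, and uses Python's standard `graphlib` module (Python 3.9+) to order the nodes from causes to effects. The helper names `causal_order` and `is_acyclic` are invented for this example.

```python
from graphlib import TopologicalSorter, CycleError

# Each node maps to the set of its parents (hypothetical example network).
parents = {
    "rain": set(),
    "sprinkler": {"rain"},
    "wet_grass": {"rain", "sprinkler"},
}

def causal_order(parent_map):
    """Return the nodes with every parent listed before its children.

    Raises graphlib.CycleError if the graph contains a feedback loop.
    """
    return list(TopologicalSorter(parent_map).static_order())

def is_acyclic(parent_map):
    """True if the graph is a valid directed acyclic graph."""
    try:
        causal_order(parent_map)
        return True
    except CycleError:
        return False

print(causal_order(parents))  # ['rain', 'sprinkler', 'wet_grass']

# Adding a link from wet_grass back to rain creates a feedback loop.
with_loop = {**parents, "rain": {"wet_grass"}}
print(is_acyclic(with_loop))  # False
```

Storing parents rather than children is a design choice: each node's probability table is conditioned on its parents, so that is the lookup a Bayesian network needs most often.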

History

Thomas Bayes was an English mathematician in the 18th century. He developed a mathematical equation for determining how likely an event is to occur, based on the frequency of its occurrence in the past.7 This equation became known as inverse probability.

During his lifetime, Bayes did not publish much of his work. In 1763, however, his paper “An Essay towards solving a Problem in the Doctrine of Chances” was published posthumously and outlined the basis of what came to be known as Bayes’ theorem.7

Bayes’ theorem suggests that in order to determine the probability of an event occurring, we must incorporate prior knowledge of conditions that might be related to the event. It is essentially a way to figure out the conditional probability: What is the likelihood of X occurring, given that Y occurred? By incorporating this prior knowledge of related variables, Bayes’ theorem is able to make informed inferences, instead of suggesting that everything is equally random. It suggests that probabilities of events should be adjusted depending on available information.
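In symbols, using the X and Y from the question above, the theorem is usually written as follows. Here P(X) is the prior probability of X, P(Y | X) is the probability of seeing Y when X is true, and P(Y) is the overall probability of Y.

```latex
P(X \mid Y) = \frac{P(Y \mid X)\, P(X)}{P(Y)},
\qquad
P(Y) = P(Y \mid X)\, P(X) + P(Y \mid \neg X)\, P(\neg X)
```

The second equation expands the denominator over the two cases "X occurred" and "X did not occur", which is often the easiest way to compute it.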

To use Bayes’ theorem, you would start with a hypothesis and a degree of belief in your hypothesis’s accuracy. As you collect more information or data relating to the hypothesis, you can adjust your degree of belief.8 For example, Bayes’ theorem might be used to determine the likelihood of a patient having cardiovascular disease if they are experiencing chest pain. Originally, the hypothesis might be that there is a 10% chance that the patient has a cardiovascular disease, because 10% of patients who come in complaining about chest pain are found to have such a disease. However, more information can raise or lower the degree of belief in the hypothesis.

Cardiovascular diseases are more common in older patients. If the patient in question is 60+, you would have to adjust the hypothesis to reflect how many patients over 60 are diagnosed with a cardiovascular disease after experiencing chest pain. The probability of the patient having a cardiovascular disease might shift to 12% in light of their age. That number can continue to change depending on what other information becomes available - for example, whether they lead an active lifestyle, whether they are a smoker, or whether cardiovascular diseases run in their family.
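The shift from 10% to 12% can be reproduced with a few lines of arithmetic. In this sketch, the 10% prior comes from the example above, while the two age shares (54% and 44%) are hypothetical values picked so that the result matches the 12% in the text. The function name `update` is also an assumption of this example.

```python
def update(prior, p_evidence_if_true, p_evidence_if_false):
    """Bayes' theorem for a yes/no hypothesis: P(hypothesis | evidence)."""
    numerator = p_evidence_if_true * prior
    return numerator / (numerator + p_evidence_if_false * (1 - prior))

prior = 0.10  # from the text: 10% of chest-pain patients have the disease

# Hypothetical shares of patients aged 60+ among those with and without
# the disease, chosen so the posterior matches the 12% in the text.
posterior = update(prior, p_evidence_if_true=0.54, p_evidence_if_false=0.44)
print(round(posterior, 2))  # 0.12
```

Each further piece of evidence (smoking, family history) can be folded in the same way, using the previous posterior as the new prior, as long as the pieces of evidence are treated as independent given the diagnosis.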

Bayes’ theorem incorporates multiple different variables into the development of a hypothesis and can be reflected on a probabilistic graphical model. So was born the Bayesian network, which helps represent the theorem.

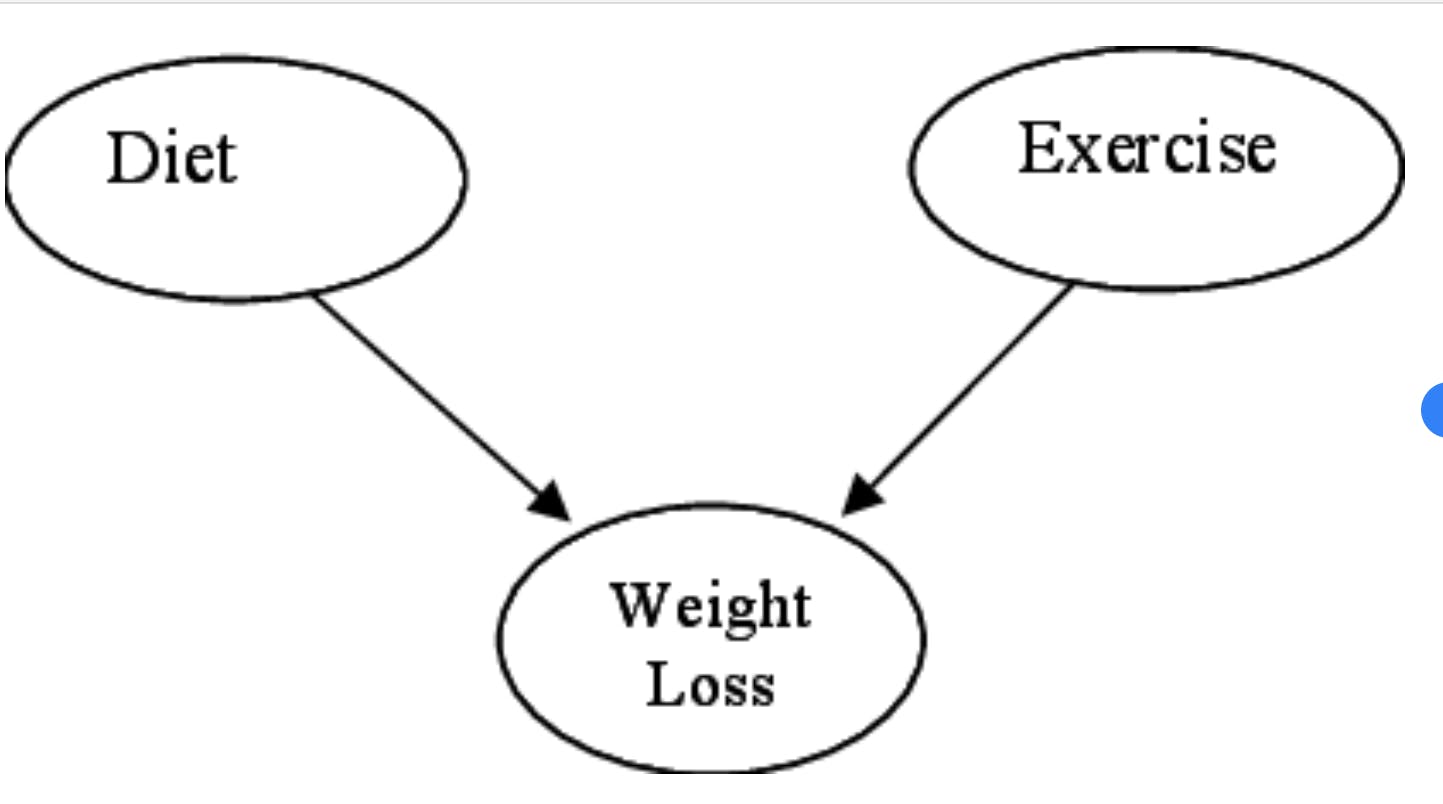

A main principle of Bayesian networks is that they must satisfy the Markov condition: once a node's parents are known, the node is independent of every variable that is not one of its descendants. ‘Parent’ nodes impact their descendants, and parents that have no link between them are treated as independent of one another. For example, in the simple Bayesian network pictured here,9 ‘Diet’ and ‘Exercise’ are parent nodes. Although diet and exercise might be correlated in practice, the network assumes no causal relationship between them (exercising does not cause you to have a different diet, nor vice versa). These parent nodes are therefore independent of each other, but both impact their descendant node, weight loss.
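For the diet and exercise example, the structure of the graph determines how the joint probability breaks apart. Writing D for diet, E for exercise and W for weight loss (symbols introduced here for illustration), the network corresponds to:

```latex
P(D, E, W) = P(D)\, P(E)\, P(W \mid D, E)
```

Each node contributes one factor, conditioned only on its own parents. Because D and E have no parents and no link between them, their factors are simply P(D) and P(E), which is the graph's way of stating that the two are independent before weight loss is observed.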

Bayesian networks grew in popularity in the 1980s as medical researchers began to recognize that many tasks, such as medical diagnosis, do not yield certain conclusions. Diagnostic tools are never 100% accurate, meaning that the probability of a patient having a particular disease is not based only on the frequency of the disease, but also on the accuracy of the diagnostic test.

Luckily, a Bayesian network could account for all these different variables. At the same time, researchers in the artificial intelligence community also began to adopt Bayesian networks, so that uncertainty could be incorporated into knowledge-based systems.10 The term Bayesian network was officially coined by Israeli-American computer scientist Judea Pearl in 1985.11

Consequences

Bayesian networks are thought to accurately reflect real life because uncertainty is incorporated into their predictive model. Bayesian networks show that even though variables are random, there are ways we can make informed predictions about probabilities. Moreover, the graphical representation of a Bayesian network can make complex probability mathematics easier to follow. As a model that allows researchers to adjust their hypothesis in the face of new evidence, it can also prevent us from falling victim to confirmation bias.

The impact of Bayesian networks is reflected in their 2004 ranking at #4 on Massachusetts Institute of Technology’s “10 Emerging Technologies that Will Change Your World” list.11 Using Bayesian networks can simplify data analysis. The networks are relatively easy to understand, meaning everyday people can use them to determine the probability of causal relationships.12 As a result, uncertainty doesn’t mean we have to make decisions completely in the dark. If the grass is wet, we can infer the likelihood that it has rained, and therefore make an informed decision about whether or not to carry an umbrella, depending on the likelihood that it will rain again.

Controversies

One critique of Bayesian networks is that because they are directed acyclic graphs, they do not allow for feedback loops. This limitation can be an issue when the model is used to represent biological systems, because our bodies often function in response to feedback loops.

Homeostasis - our bodies’ regulation of internal functioning - is one example of a biological feedback loop in which descendant nodes would impact parent nodes. For example, goosebumps are an effect of being cold. In a Bayesian network, goosebumps would be a descendant node, and the cold feeling would be the parent node. However, goosebumps then impact the likelihood that you are cold, since they warm you up. A Bayesian network does not account for this two-way direction of cause and effect.13

There are other probability models that function differently to Bayesian networks, like neural networks. Neural networks allow for correlations between input variables, unlike Bayesian networks.14 Instead of basing themselves on the probabilities of independent variables only, neural networks work by teaching the system how to differentiate between different variables.

For example, if you want to create a program able to differentiate between images of squares and images of circles, you would input many different examples of circles and squares and classify them as such. The machine would then, hopefully, learn by itself what properties it should examine in order to categorize incoming shapes. Essentially, neural networks work from inputs to outputs, whereas Bayesian networks work from outputs and try to trace causes back to inputs.

Predicting Election Results

American statistician Nate Silver rose to fame after he correctly predicted not only that Barack Obama would win the 2012 U.S. Presidential election, but also the voting outcome of every single state.15 How did this previously unknown blogger make these wildly accurate predictions, even when the media claimed the race was about even? All thanks to Bayesian networks.

The way that the U.S. presidential election functions is hierarchical, which makes it well suited to a Bayesian network that assumes that parent nodes impact descendant nodes, but not vice versa. In order to win the election, a candidate must win a majority of electoral votes, which are awarded state by state. State results are therefore the parent nodes that impact the descendant node: the outcome of the election.
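The step from state results to the national outcome can be sketched in code. The presidency is decided by electoral votes awarded state by state, so the example below combines per-state win probabilities into a probability of reaching a vote threshold. This is a toy illustration and not Silver's actual model: the three states, their vote counts and their probabilities are hypothetical, each state is treated as winner-take-all, and the states are assumed independent of one another.

```python
def electoral_vote_distribution(states):
    """Map each possible electoral-vote total to its probability.

    `states` is a list of (electoral_votes, win_probability) pairs. The
    states are treated as independent, which is a simplifying assumption.
    """
    dist = {0: 1.0}
    for votes, p_win in states:
        nxt = {}
        for total, prob in dist.items():
            # Candidate wins this state and gains its votes.
            nxt[total + votes] = nxt.get(total + votes, 0.0) + prob * p_win
            # Candidate loses this state and the total is unchanged.
            nxt[total] = nxt.get(total, 0.0) + prob * (1 - p_win)
        dist = nxt
    return dist

def win_probability(states, votes_needed):
    """Probability of collecting at least `votes_needed` electoral votes."""
    dist = electoral_vote_distribution(states)
    return sum(p for total, p in dist.items() if total >= votes_needed)

# Hypothetical contest: (electoral votes, chance of winning the state).
toy_states = [(10, 0.9), (6, 0.5), (5, 0.2)]
print(round(win_probability(toy_states, votes_needed=11), 3))  # 0.55
```

In this toy contest the candidate is a strong favorite in the largest state yet wins overall only 55% of the time, because 10 votes alone fall short of the 11 needed.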

Silver collected data months prior to voting on how people thought they would vote. Of course, there can always be discrepancies between how people think they will vote and how they actually vote. Luckily, that did not pose an issue for Silver, because Bayes' theorem allows shifts in hypothesis depending on new information collected.

Silver started off with a ‘nowcast’, which determined the probability of the outcome of each state if voting were to happen on any given day. Various variables impacted this estimate: the socioeconomic status of the population of each state, its racial makeup, and its voting history, among others. These variables gave Silver an initial prediction of who would win each state. Then, as time went on, Silver incorporated new incoming data. For example, if unemployment rates changed in a state, he considered it a factor and updated his predictions.15

Silver generated the probability that Obama would win at different times throughout the election period. As election day grew nearer, more and more polling data emerged, giving Silver confidence in his predictions. It was through mapping all the variables onto Bayesian networks that Silver was able to correctly predict the outcome of the 2012 election.15

Medical Diagnosis Uncertainty

Unfortunately, diagnostic tests are never 100% accurate. Thankfully, Bayesian networks account for this uncertainty. In a Bayesian network, the test result is not the only important variable when it comes to diagnosis. The frequency of false positives and false negatives also influences the likelihood of a diagnosis being correct.
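A short calculation shows how much false positives can matter. The sketch below applies Bayes' theorem to a single test result. The prevalence, sensitivity (share of true cases detected) and specificity (share of healthy people correctly cleared) are hypothetical figures, and the function name is chosen for this example.

```python
def positive_predictive_value(prevalence, sensitivity, specificity):
    """P(disease | positive test) via Bayes' theorem."""
    true_pos = sensitivity * prevalence
    false_pos = (1 - specificity) * (1 - prevalence)
    return true_pos / (true_pos + false_pos)

# Hypothetical figures: 1% of people have the disease; the test detects
# 95% of true cases and correctly clears 95% of healthy people.
ppv = positive_predictive_value(prevalence=0.01,
                                sensitivity=0.95,
                                specificity=0.95)
print(round(ppv, 3))  # 0.161
```

Even with a test that is right 95% of the time in both directions, a positive result here implies only about a 16% chance of disease, because healthy people vastly outnumber sick ones and so produce most of the positives.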

Bayesian networks could be useful for estimating accurate COVID-19 infection and mortality rates. A group of researchers conducted a study suggesting that globally reported statistics of COVID-19 do not take into account the uncertainty of data.17 These statistics simply use how many people tested positive as the infection rate figure.

Using a Bayesian network, the researchers examined how many times positive and negative tests were actually false and adjusted the infection rate accordingly. Different tests have different accuracy rates, which means that the variable for whether or not someone has COVID-19 is not solely dependent on the test result.
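To see why test accuracy changes the infection rate, consider a simpler stand-in for the researchers' model. The sketch below is not their Bayesian network. It uses the Rogan-Gladen correction, a standard formula that recovers the true infection share from the share of positive tests when the test's sensitivity and specificity are known. All numbers are hypothetical.

```python
def corrected_prevalence(positive_share, sensitivity, specificity):
    """Rogan-Gladen estimate of true prevalence from the positive-test share."""
    denom = sensitivity + specificity - 1
    if denom <= 0:
        raise ValueError("test must perform better than chance")
    estimate = (positive_share + specificity - 1) / denom
    # Clamp to [0, 1], since sampling noise can push the raw estimate outside.
    return min(1.0, max(0.0, estimate))

# Hypothetical: 8% of tests come back positive, using a test that misses
# 30% of true infections but rarely flags a healthy person.
print(round(corrected_prevalence(0.08, sensitivity=0.70, specificity=0.99), 3))
# 0.101
```

With a test that misses many true cases, the corrected rate (about 10%) is higher than the raw 8% of positive tests, which is the same direction of adjustment the study reports.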

Figuring out the false positives and negatives is also important for determining fatality rates. If someone died and had previously tested positive for COVID-19, the likelihood that the COVID test was accurate is increased. As a result of employing a Bayesian network model, the researchers concluded that infection rates are actually higher than popular statistics suggest, but mortality rates are lower than reported.17

Related TDL Content

AI, Indeterminism, and Good Storytelling

Being able to predict car accidents is useful for car insurance companies, as predictions inform them of their likely costs and therefore how much they should charge customers. These probabilities are not deterministic; they cannot tell you why car crashes occur, but only that they do occur. In this article, TDL Research Director Brooke Struck outlines how artificial intelligence systems are beginning to incorporate the same kind of indeterministic probability modelling, and why this feels uncomfortable for humans who like more causal explanations.

Decision Biases Amongst Lawyers: Conjunction Fallacy

Whether or not we believe an event happened is impacted by the way its narration is framed. According to the conjunction fallacy, the more precise detail given in the description of an event, the more probable people tend to find its occurrence. In this article, our writer Tom Spiegler explores how the conjunction fallacy impacts lawyers, judges, and court decisions.

Sources

- Siegmund, D. O. (2005, September 9). Probability theory. Encyclopedia Britannica. https://www.britannica.com/science/probability-theory

- Kim, A. (2019, November 1). Conditional Independence — The backbone of Bayesian networks. Medium. https://towardsdatascience.com/conditional-independence-the-backbone-of-bayesian-networks-85710f1b35b

- Bayes Quotes. (n.d.). Goodreads. Retrieved April 13, 2021, from https://www.goodreads.com/quotes/tag/bayes#

- Probability: the basics. (n.d.). Khan Academy. Retrieved April 13, 2021, from https://www.khanacademy.org/math/statistics-probability/probability-library/basic-theoretical-probability/a/probability-the-basics

- Barrett, M. (2021, January 11). An Introduction to Directed Acyclic Graphs. The Comprehensive R Archive Network. https://cran.r-project.org/web/packages/ggdag/vignettes/intro-to-dags.html

- Silver, L. (2016, August 20). Causal Markov Condition Simple Explanation. Cross Validated. https://stats.stackexchange.com/questions/230897/causal-markov-condition-simple-explanation

- Routledge, R. (2005, November 10). Bayes's theorem. Encyclopedia Britannica. https://www.britannica.com/topic/Bayess-theorem

- Sanchez, F. (2017, November 2). Introduction to Bayesian Thinking: from Bayes theorem to Bayes networks. Medium. https://towardsdatascience.com/will-you-become-a-zombie-if-a-99-accuracy-test-result-positive-3da371f5134#_=_

- McKevitt, P. (2020, September 3). Figure 2.33: Example of a simple Bayesian network. ResearchGate. https://www.researchgate.net/figure/Example-of-a-simple-Bayesian-Network_fig13_228776941

- Neapolitan, R., & Jiang, X. (2016). The Bayesian Network Story. In A. Hájek & C. Hitchcock (Eds.), The Oxford handbook of Probability and Philosophy. Oxford University Press, USA. https://doi.org/10.1093/oxfordhb/9780199607617.013.31

- What is a Bayesian Network? (n.d.). The BayesiaLab Knowledge Hub. Retrieved April 13, 2021, from https://library.bayesia.com/articles/#!bayesialab-knowledge-hub/bayesian-belief-network-definition-2850990

- Friedman, N., Goldszmidt, M., Heckerman, D., & Russell, S. J. (1997). Challenge: What is the Impact of Bayesian Networks on Learning? In Proceedings of the 15th international joint conference on Artificial intelligence (pp. 10-15).

- The Albert Team. (2020, June 1). Positive and Negative Feedback Loops in Biology. Albert Resources. https://www.albert.io/blog/positive-negative-feedback-loops-biology/

- Gupta, C. (2016, December 7). What is the difference between a Bayesian network and an artificial neural network? Quora. https://www.quora.com/What-is-the-difference-between-a-Bayesian-network-and-an-artificial-neural-network

- O'Hara, B. (2012, November 8). How did Nate Silver predict the US election? The Guardian. https://www.theguardian.com/science/grrlscientist/2012/nov/08/nate-sliver-predict-us-election

- Neil, M., Fenton, N., Osman, M., & McLachlan, S. (2020). Bayesian network analysis of Covid-19 data reveals higher infection prevalence rates and lower fatality rates than widely reported. Journal of Risk Research, 23(7-8), 866-879. https://doi.org/10.1101/2020.05.25.20112466